The naïveté of controlling AI

The people shouting that we must “stop” or heavily restrict AI are naïve in three distinct ways.

First is the *Luddite naïveté*: the belief that AI is a simple job-destroying machine rather than a general-purpose technology, paired with the fantasy that a panel of experts can centrally manage it. This is the old technophobia dressed up in moral righteousness: fear of change, faith in wise overseers, and blindness to the economic forces driving innovation.

Second is the *stoppability illusion*. Even if we wanted to halt AI, we couldn’t. The technology is already global, open-source, cheap, and able to run on commodity hardware. Models are forked instantly, weights leak, developers multiply, and rival nations race forward regardless of what the West decides. You can no more pause the planetary-scale diffusion of a technology than you can pause the economy.

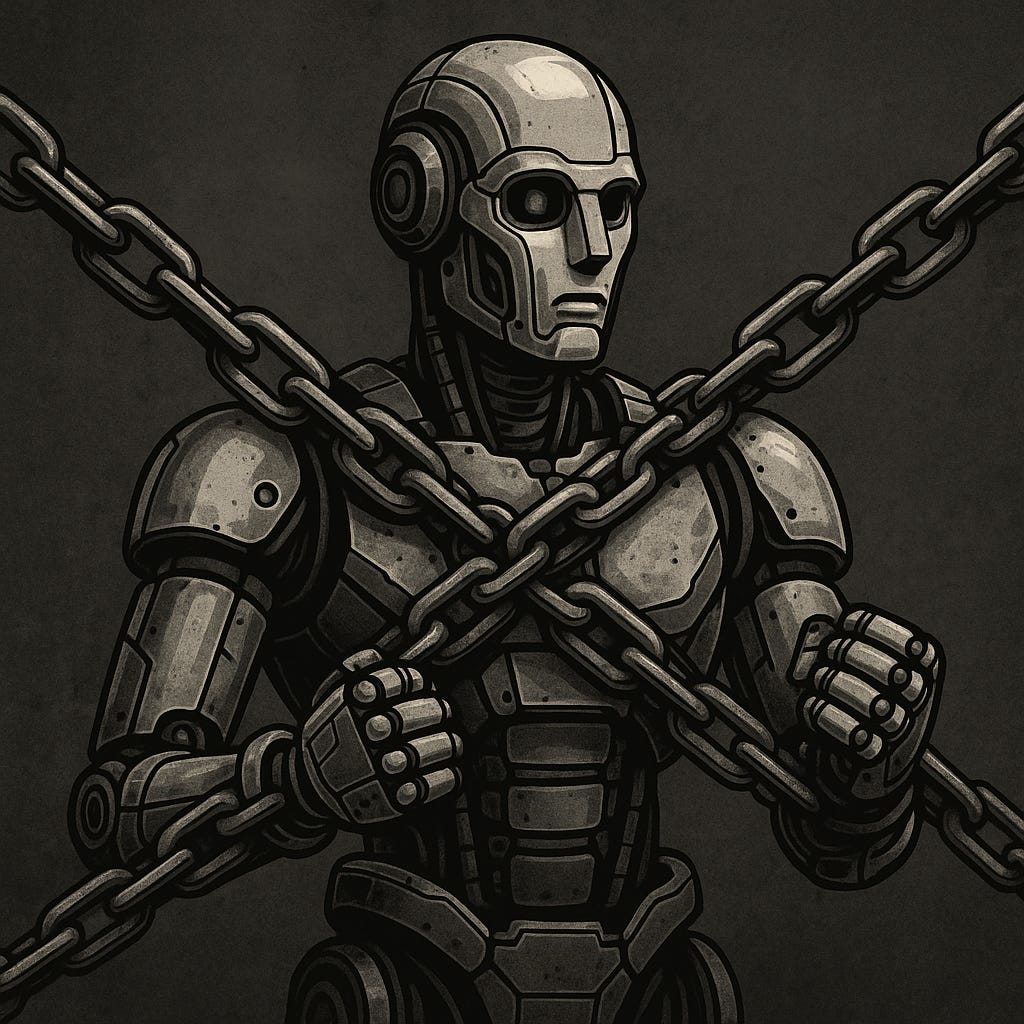

Third is *power-centralization blindness*. Every restriction empowers exactly the actors we should fear most: governments, megacorps, and the already-entrenched. Regulation never stops bad actors; it shackles good ones. It guarantees a world where only elites possess advanced AI while the public is left with sanitized toys. Call it “safety” if you want—functionally it’s a mandate for monopoly.

Together, these three delusions form a comforting story: that AI can be halted, controlled, or limited to the “responsible.” In reality, attempts to suppress AI make it more dangerous, not safer, because they consolidate it in the hands of the powerful. A fragmented, decentralized ecosystem is the safer path.

If you want a world where AI is aligned with human interests, you don’t get there by banning it—you get there by ensuring it’s open, distributed, competitive, and in the hands of the many, not the few.