LLMs Don’t Steal. People Do.

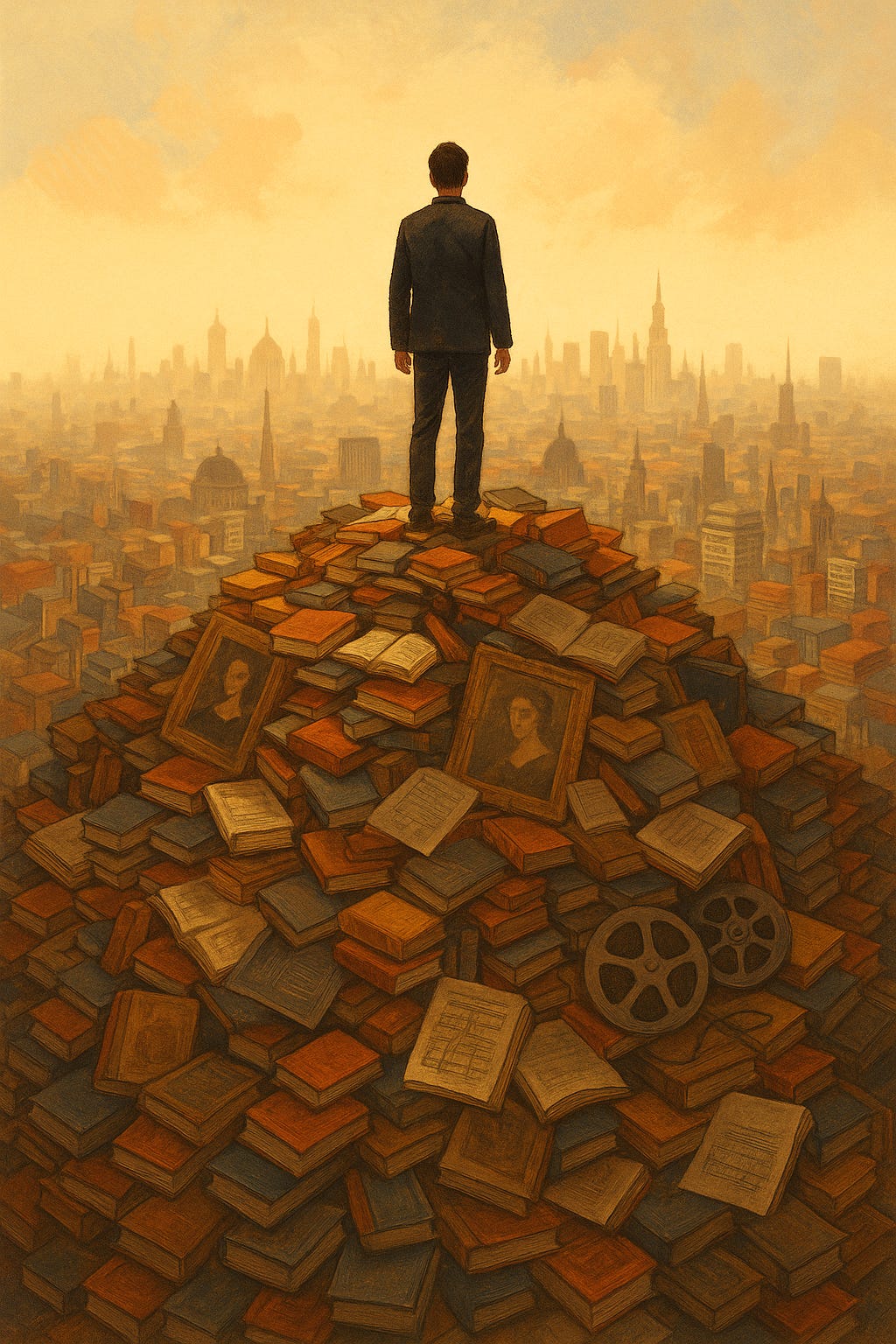

There’s a lot of noise right now about how large language models are “stealing” people’s work. Why? Because they were trained on a mountain of human-made stuff — books, articles, code, blogs, art. And apparently, that’s supposed to be theft.

But that’s just… learning. At scale.

That’s what humans do. We read, we absorb, we remix. We don’t license every book we’ve ever been influenced by. We don’t pay royalties to every scientist or artist who helped shape our thoughts. We stand on shoulders. So do LLMs.

The real question isn’t what the model read. The real question is what you do with it.

Use it to understand what’s been done? Fine. Use it to spot gaps, spark ideas, see new combinations? Great. Use it to write in your own voice, better than before? That’s what tools are for.

But if you use it to knock off someone’s style, mimic their voice, and sell the imitation? That’s theft. It would be theft if a human did it, too.

The outrage isn’t really about the training data. It’s about the fear that machines might now do too good a job helping people think, and maybe even create. But we don’t ban calculators for doing math faster than we can. We use them to go further.

LLMs aren’t thieves. They’re lenses. Amplifiers. Force multipliers. What matters is what you point them at.

So let’s be clear:

Theft isn’t in the training. It’s in the mimicry.

If you use a model like a tool, you’re fine.

If you use it like a forger, you’re not.